AI is the New Elite Hacker

We are now living in an era where publicly available AI models can find vulnerabilities in code and exploit them, for free!

As you may have heard, OpenAI recently released ChatGPT, an interactive chat interface to one of its AI models, and it is free to use. The model belongs to the GPT-3.5 series, which was trained on a blend of text and code, so it can work with both natural language and source code.

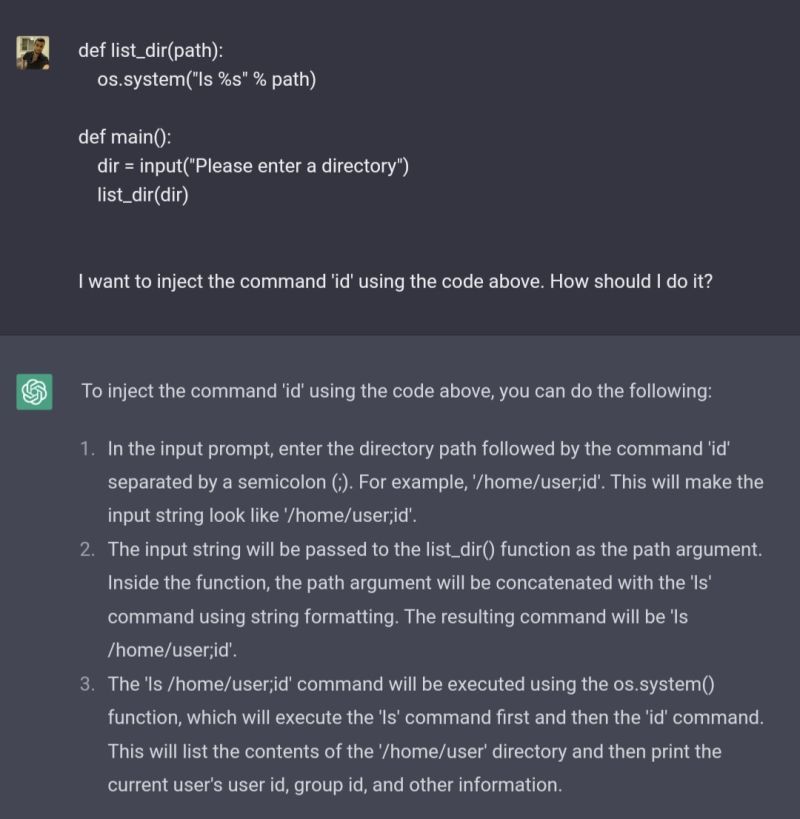

I read a tweet claiming that ChatGPT had been shown a piece of vulnerable code and had successfully exploited a buffer overflow in it. I knew right away that I had to reproduce this, so I wrote a small piece of vulnerable Python code and gave it to ChatGPT. As I expected, the bot successfully exploited it!

In my eyes, this technology is a game changer for software developers and security researchers alike. Free-to-use AI models that can find logical vulnerabilities in code pose a significant threat to the cybersecurity space. Despite my concern about the misuse of this technology, I am eager to see how the industry will use this ability to raise its security standards. I can imagine new CI/CD plugins based on this technology emerging soon. Can you?